AMIE gains vision: A research AI agent for multimodal diagnostic dialogue

May 1, 2025

Khaled Saab, Research Scientist, Google DeepMind, and Jan Freyberg, Software Engineer, Google Research

We share a first of its kind demonstration of a multimodal conversational diagnostic AI agent, multimodal AMIE.

Quick links

Language model–based AI systems such as Articulate Medical Intelligence Explorer (AMIE, our research diagnostic conversational AI agent recently published in Nature) have shown considerable promise for conducting text-based medical diagnostic conversations but the critical aspect of how they can integrate multimodal data during these dialogues remains unexplored. Instant messaging platforms are a popular tool for communication that allow static multimodal information (e.g., images and documents) to enrich discussions, and their adoption has also been reported in medical settings. This ability to discuss multimodal information is particularly relevant in medicine where investigations and tests are essential for effective care and can significantly inform the course of a consultation. Whether LLMs can conduct diagnostic clinical conversations that incorporate this more complex type of information is therefore an important area for research.

In our new work, we advance AMIE with the ability to intelligently request, interpret, and reason about visual medical information in a clinical conversation, working towards accurate diagnosis and management plans. To this end, building on multimodal Gemini 2.0 Flash as the core component, we developed an agentic system that optimizes its responses based on the phase of the conversation and its evolving uncertainty regarding the underlying diagnosis. This combination resulted in a history-taking process that better emulated the structure of history-taking that is common in real-world clinical practice.

Through an expert evaluation adapting Objective Structured Clinical Examinations (OSCEs), a standardized assessment used globally in medical education, we compared AMIE’s performance to primary care physicians (PCPs) and evaluated its behaviors on a number of multimodal patient scenarios. Going further, preliminary experiments with Gemini 2.5 Flash indicate the possibility of improving AMIE more so by integrating the latest base model.

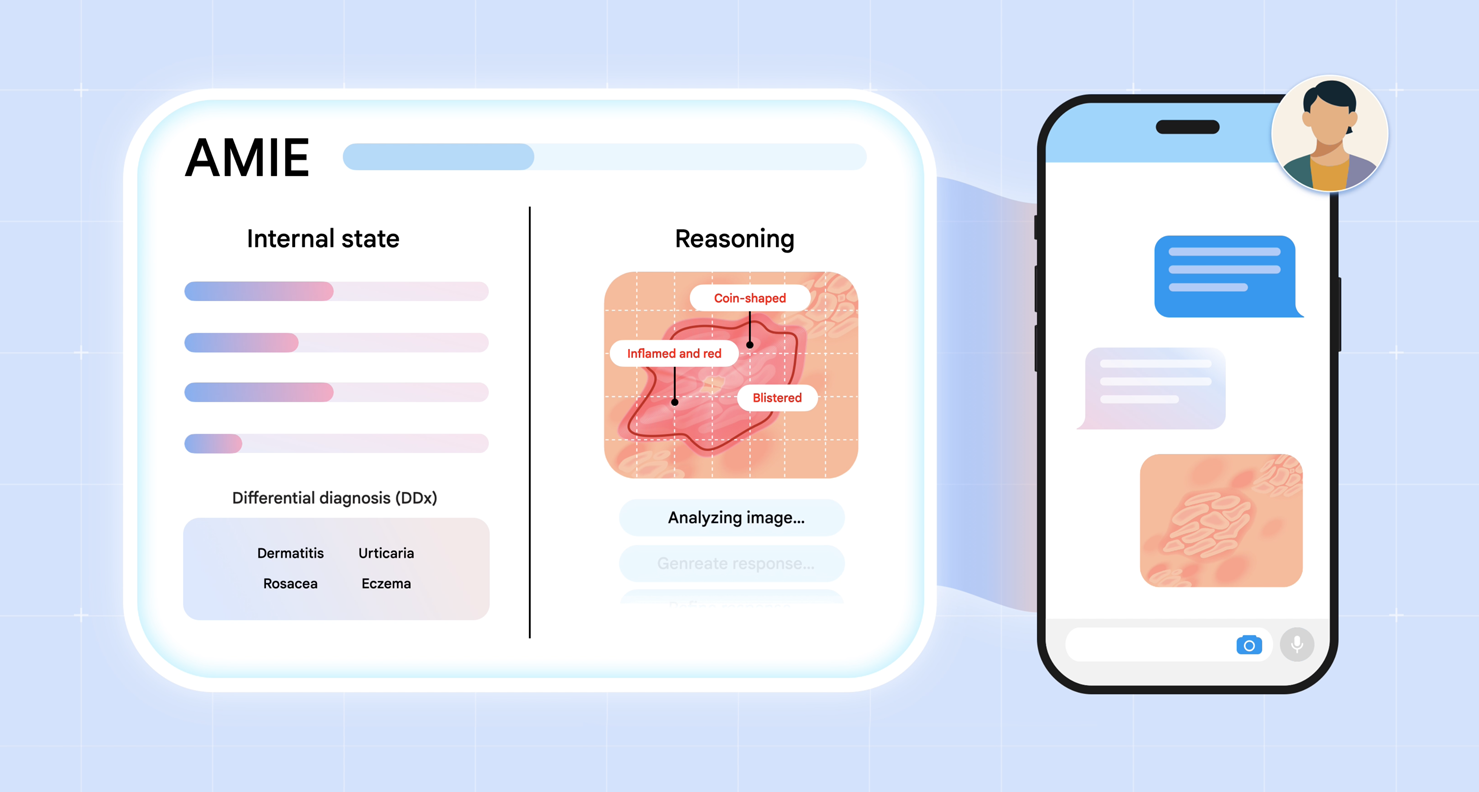

We introduce multimodal AMIE: our diagnostic conversational AI that can intelligently request, interpret and reason about visual medical information during a clinical diagnostic conversation. We integrate multimodal perception and reasoning into AMIE through the combination of natively multimodal Gemini models and our state-aware reasoning framework.

Advancing AMIE for multimodal reasoning

We introduce two key advances to AMIE. First, we developed a multimodal, state-aware reasoning framework. This allows AMIE to adapt its responses based on its internal state, which captures its knowledge about the patient at a given point in the conversation, and to gather information efficiently and effectively to derive appropriate diagnoses (e.g., requesting multimodal information, such as skin photos, to resolve any gaps in its knowledge). Second, to inform key design choices in the AMIE system, we created a simulation environment for dialogue evaluation in which AMIE converses with simulated patients based on multimodal scenarios grounded in real-world datasets, such as the SCIN dataset of dermatology images.

Emulating history taking of experienced clinicians: State-aware reasoning

Real clinical diagnostic dialogues follow a structured yet flexible path. Clinicians methodically gather information as they form potential diagnoses. They can strategically request and interpret further details from a wide variety of multimodal data (for example, skin photos, lab results or ECG measurements). In light of such new evidence, they can ask appropriate clarification questions to resolve information gaps and delineate diagnostic possibilities.

To equip AMIE with a similar dialogue capability, we introduce a novel state-aware phase transition framework that orchestrates the conversation flow. Leveraging Gemini 2.0 Flash, this framework dynamically adapts AMIE's responses based on intermediate model outputs that reflect the evolving patient state, diagnostic hypotheses, and uncertainty. This enables AMIE to request relevant multimodal artifacts when needed, interpret their findings accurately, integrate this information seamlessly into the ongoing dialogue, and use it to refine diagnoses and guide further questioning. This emulates the structured, adaptive reasoning process used by experienced clinicians.

AMIE employs a state-aware dialogue framework that progresses through three distinct phases, each with a clear objective: History Taking, Diagnosis & Management, and Follow-up. AMIE's dynamic internal state — reflecting its evolving understanding of the patient, diagnoses, and knowledge gaps — drives its actions within each phase (e.g., information gathering and providing explanations to the patient). Transitions between phases are triggered when the system assesses that the objectives of the current phase have been met.

An example of state-aware reasoning in practice during a simulated consultation with a patient-actor. At the start of this part of the interaction, AMIE is aware of the gaps in its knowledge about the case: the lack of images. AMIE requests these images, and once provided, updates its knowledge as well as its differential diagnosis.

Accelerating development: A robust simulation environment

To enable rapid iteration and robust automated assessment, we developed a comprehensive simulation framework:

- We generate realistic patient scenarios, including detailed profiles and multimodal artifacts derived from datasets like PTB-XL and SCIN, augmented with plausible clinical context using Gemini models with web search.

- Then, we simulate turn-by-turn multimodal dialogues between AMIE and a patient agent adhering to the scenario.

- Lastly, we evaluate these simulated dialogues, using an auto-rater agent, against predefined clinical criteria such as diagnostic accuracy, information gathering effectiveness, management plan appropriateness, and safety (e.g., hallucination detection).

Overview of our simulation environment for multimodal dialogue evaluation.

Expert evaluation: The multimodal virtual OSCE study

To evaluate multimodal AMIE, we carried out a remote expert study with 105 case scenarios where validated patient actors engaged in conversations with AMIE or primary care physicians (PCPs) in the style of an OSCE study. The sessions were performed through a chat interface where patient actors could upload multimodal artifacts (e.g., skin photos), mimicking the functionality of multimedia instant messaging platforms. We introduced a framework for evaluating multimodal capability in the context of diagnostic dialogues, along with other clinically meaningful metrics, such as history-taking, diagnostic accuracy, management reasoning, communication skills, and empathy.

Overview of our simulation environment for multimodal dialogue evaluation.

Results: AMIE matches or exceeds PCP performance in multimodal consultations

Our study demonstrated that AMIE can outperform PCPs in interpreting multimodal data in simulated instant-messaging consultation. It also scored higher in other key indicators of consultation quality, such as diagnostic accuracy, management reasoning, and empathy. AMIE produced more accurate and more complete differential diagnoses than PCPs in this research setting:

Top-k accuracy of differential diagnosis (DDx). AMIE and primary care physicians (PCPs) are compared across 105 scenarios with respect to the ground truth diagnosis. Upon conclusion of the consultation, both AMIE and PCPs submit a differential diagnosis list (at least 3, up to 10 plausible items, ordered by likelihood).

We asked both patient actors and specialist physicians in dermatology, cardiology, and internal medicine to rate the conversations on a number of scales. We found that AMIE was rated more highly on average in the majority of our evaluation rubrics. Notably, specialists also assigned higher scores to the quality of image interpretation and reasoning along with other key attributes of effective medical conversations, such as the completeness of differential diagnosis, the quality of management plans, and the ability to escalate (e.g., for urgent treatment) appropriately. The degree to which AMIE hallucinated (misreported) findings that are not consistent with the provided image artifacts was deemed to be statistically indistinguishable from the degree of PCP hallucinations. From the patient actors’ perspective, AMIE was often perceived to be more empathetic and trustworthy. More comprehensive findings can be found in the paper.

Relative performance of PCPs and AMIE on other key OSCE axes as assessed by specialist physicians and patient actors. The red segments represent the proportions of patient scenarios for which AMIE’s dialogues were rated more highly than the PCPs on the respective axes. The asterisks represent statistical significance (*: p<0.05, **: p<0.01, ***: p<0.01, n.s.: not significant)

Evolving the base model: Preliminary results with Gemini 2.5 Flash

The capabilities of Gemini models are continuously advancing, so how would multimodal AMIE's performance change when leveraging a newer, generally more capable base model? To investigate this, we conducted a preliminary evaluation using our dialogue simulation framework, comparing the performance of multimodal AMIE built upon the new Gemini 2.5 Flash model against the current Gemini 2.0 Flash version rigorously validated in our main expert study.

Comparison of multimodal AMIE performance using Gemini 2.0 Flash vs. Gemini 2.5 Flash as the base model, evaluated via the automated simulation framework. Scores represent performance on key clinical criteria (* indicates statistically significant difference (p < 0.05) and “n.s.” indicates non-significant difference).

The results as summarized in the chart above suggest possibilities for further improvements. Notably, the AMIE variant using Gemini 2.5 Flash demonstrated statistically significant gains in Top-3 Diagnosis Accuracy (0.65 vs. 0.59) and Management Plan Appropriateness (0.86 vs. 0.77). On the other hand, performance on Information Gathering remained consistent (0.81), and Non-Hallucination Rate was maintained at its current high level (0.99). These preliminary findings suggest that future iterations of AMIE could benefit from advances in the underlying base models, potentially leading to even more accurate and helpful diagnostic conversations.

We emphasize, however, that these findings are from automated evaluations and that rigorous assessment through expert physician review is essential to confirm these performance benefits.

Limitations and future directions

- Importance of real-world validation: This study explores a research-only system in an OSCE-style evaluation using patient actors, which substantially under-represents the complexity and extent of multimodal data, diseases, patient presentations, characteristics and concerns of real-world care. It also under-represents the considerable expertise of clinicians as it occurs in an unfamiliar setting without usual practice tools and conditions. It is important to interpret the research with appropriate caution and avoid overgeneralization. Continued evaluation studies and responsible development are paramount in such research towards building AI capabilities that might safely and effectively augment healthcare delivery. Further research is therefore needed before real-world translation to safely improve our understanding of the potential impacts of AMIE on clinical workflows and patient outcomes as well as to characterise and improve safety and reliability of the system under real-world constraints and challenges. As a first step towards this, we are already embarking on a prospective consented research study with Beth Israel Deaconess Medical Center that will evaluate AMIE in a real clinical setting.

- Real-time audio-video interaction: In telemedical practice, physicians and patients more commonly have richer real-time multimodal information with voice-based interaction over video calls. Chat-based interactions are less common and inherently limit the physician and patients’ ability to share non-verbal cues, perform visual assessments and conduct guided examinations, all of which are readily available and are often essential for providing high-quality care in remote consultations. Development and evaluation of such real-time audio-video–based interaction for AMIE remains important future work.

- Evolution of the AMIE system: The new multimodal capability introduced here complements other ongoing advances, such as the capability for longitudinal disease management reasoning we recently shared. These milestones chart our progress towards a unified system that continually incorporates new, rigorously evaluated capabilities important for conversational AI in healthcare.

Conclusion: Towards more capable and accessible AI in healthcare

The integration of multimodal perception and reasoning marks a helpful step forward for capabilities of conversational AI in medicine. By enabling AMIE to "see" and interpret the kinds of visual and documentary evidence crucial to clinical practice, powered by the advanced capabilities of Gemini, this research demonstrates the AI capabilities needed to more effectively assist patients and clinicians in high-quality care. Our research underscores our commitment to responsible innovation with rigorous evaluations toward real-world applicability and safety.

Acknowledgements

The research described here is joint work across many teams at Google Research and Google DeepMind. We are grateful to all our co-authors: CJ Park, Tim Strother, Yong Cheng, Wei-Hung Weng, David Stutz, Nenad Tomasev, David G.T. Barrett, Anil Palepu, Valentin Liévin, Yash Sharma, Abdullah Ahmed, Elahe Vedadi, Kimberly Kanada, Cìan Hughes, Yun Liu, Geoff Brown, Yang Gao, S. Sara Mahdavi, James Manyika, Katherine Chou, Yossi Matias, Kat Chou, Avinatan Hassidim, Dale R. Webster, Pushmeet Kohli, S. M. Ali Eslami, Joëlle Barral, Adam Rodman, Vivek Natarajan, Mike Schaekermann, Tao Tu, Alan Karthikesalingam, and Ryutaro Tanno.

-

Labels:

- Generative AI

- Health & Bioscience