# get OSM data

library(osmar)

library(maptools)

rotewandsteig <- get_osm(way(166274005), full = T)

sp_rotewandsteig <- as_sp(rotewandsteig, what = "lines")

# convert to KML

kmlLine(sp_rotewandsteig@lines[[1]], kmlfile = "rotewandsteig.kml",

lwd = 3, col = "blue", name = "Rotewandsteig")

# view it

shell.exec("rotewandsteig.kml")

26 Sept 2014

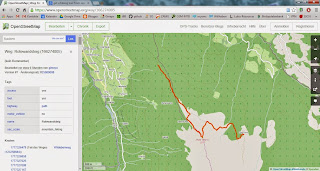

Make a KML-File from an OpenStreetMap Trail

Ever wished to use a trail on OSM on your GPS or smartphone? With this neat little R-Script this can easily be done. You'll just need to search OpenStreetMap for the ID of the trail (way), put this as argument to osmar::get_osm, convert to KML and you're good to go!

4 May 2014

R GIS: Function to Reverse KML Paths

This is a function I wrote up for reversing KML-paths. The paths within a KML can be partially matched by their name-tags

## name: ReverseKmlPath

## use: Reverse KML-pathsby matching their Name tags

## arguments: PATH_TO_DOC, the path to the KML-file

## NAME, the value of the name tag, function uses partial matching!

## 'Trail_xyz' will be matched by 'rail'

## requirements: KML-structure with Placemarks containing a and a tag

## author: Kay Cichini

## date: 01-05-2014

## license: CC-BY-NC-SA

ReverseKmlPath <- function(PATH_TO_DOC, NAMES) {

require(XML)

doc <- xmlInternalTreeParse(PATH_TO_DOC)

if (xmlNamespaceDefinitions(doc)[[1]]$uri == "http://www.opengis.net/kml/2.2") {

namespaces <- c(kml = "http://www.opengis.net/kml/2.2")

flag <- 1

} else {

if (xmlNamespaceDefinitions(doc)[[1]]$uri == "http://earth.google.com/kml/2.0") {

namespaces <- c(kml0 = "http://earth.google.com/kml/2.0")

flag <- 0

} else {

stop ("Stopped!: Check namespace issue..")

}

}

for (NAME in NAMES) {

if (flag) {

query <- paste0("//kml:Placemark[contains(kml:name,'", sprintf("%s", NAME), "'", ")]//kml:coordinates")

} else {

query <- paste0("//kml0:Placemark[contains(kml0:name,'", sprintf("%s", NAME), "'", ")]//kml0:coordinates")

}

coords <- tryCatch(getNodeSet(doc, query, namespaces),

error = function(e) message(paste("\nError: *", NAME, "* was NOT successfully matched\n")))

for (i in length(coords)) {

#grab coordinates from node and reverse order

rev_coord_vector <- rev(unlist(strsplit(gsub("\\t|\\n", "", xmlValue(coords[[i]])), "\\s")))

rev_coord_string <- paste(rev_coord_vector, collapse = " ")

# re-insert reversed line-string:

xmlValue(coords[[i]]) <- rev_coord_string

# message

if (flag) {

query <- paste0("//kml:Placemark[contains(kml:name,'", sprintf("%s", NAME), "'", ")]//kml:name")

} else {

query <- paste0("//kml0:Placemark[contains(kml0:name,'", sprintf("%s", NAME), "'", ")]//kml0:name")

}

match <- xmlValue(getNodeSet(doc, query, namespaces)[[i]])

message(paste0("matched name: ", match, "\n..."))

}

}

# save:

message("Reversed paths saved to:")

saveXML(doc, paste0(dirname(PATH_TO_DOC), "/reversed_", basename(PATH_TO_DOC)),

prefix = newXMLCommentNode("This file was created with the R-package XML::saveXML, see: "))

}

## not run:

tf <- tempfile(fileext = ".kml")

download.file("http://dev.openlayers.org/releases/OpenLayers-2.13.1/examples/kml/lines.kml", tf, mode = "wb")

ReverseKmlPath( PATH_TO_DOC = tf, NAMES = c("Absolute", "Relative") )

shell.exec(tf)

shell.exec(paste0(dirname(tf), "/reversed_", basename(tf)))

3 May 2014

R GIS: Generalizer for KML Paths

I'm posting a recent project's spin-off, which is a custom line-generalizer which I used for huge KML-paths. Anyone with a less clumpsy approach?

## line generalizing function: takes two vectors of with x/ycoords

## and return ids of x/y elements which distance to its next element

## is shorter than the average distance between consecutive vertices

## multiplied by 'fac'

check_dist <- function(x, y, fac) {

dm <- as.matrix(dist(cbind(x, y)))

## supradiagonal holds distance from 1st to 2nd, 2nd to 3rd, etc. element

d <- diag(dm[-1, -ncol(dm)])

mean_dist <- mean(d)

keep <- logical()

## allways keep first..

keep[1] <- T

for (i in 1:(length(x) - 2)) {

keep[i + 1] <- (d[i] > mean_dist * fac)

message(paste0("Distance from item ", i, " to item ", i + 1, " is: ", d[i]))

}

message(paste0("Treshold is: ", mean_dist * fac))

cat("--\n")

## .. and always keep last

keep[length(x)] <- T

return(keep)

}

## Testing function check_dist:

x <- rnorm(5)

y <- rnorm(5)

(keep <- check_dist(x, y, 1.2))

plot(x, y)

lines(x[keep], y[keep], lwd = 4, col = "green")

lines(x, y, lwd = 1, col = "red")

text(x, y + 0.1, labels = c(1:length(x)))

## exclude vertices by generalization rule. coordinate-nodes with low number of vertices,

## segments with less than 'min_for_gen' vertices will not be simplified, in any case coordinates will be

## rounded to 5-th decimal place

generalize_kml_contour_node <- function(node, min_for_gen, fac) {

require(XML)

LineString <- xmlValue(node, trim = T)

LineStrSplit <- strsplit(unlist(strsplit(LineString, "\\s")), ",")

# filter out empty LineStrings which result from strsplit on '\\s'

LineStrSplit <- LineStrSplit[sapply(LineStrSplit, length) > 0]

# all 3 values are required, in case of error see for missing z-values:

x <- round(as.numeric(sapply(LineStrSplit, "[[", 1, simplify = T)), 5)

y <- round(as.numeric(sapply(LineStrSplit, "[[", 2, simplify = T)), 5)

z <- round(as.numeric(sapply(LineStrSplit, "[[", 3, simplify = T)), 5)

# for lines longer than 'min_for_gen' vertices, generalize LineStrings

if (length(x) >= min_for_gen) {

keep <- check_dist(x, y, fac)

x <- x[keep]

y <- y[keep]

z <- z[keep]

xmlValue(node) <- paste(paste(x, y, z, sep = ","), collapse = " ")

# for all other cases, insert rounded values

} else {

xmlValue(node) <- paste(paste(x, y, z, sep = ","), collapse = " ")

}

}

## mind to use the appropiate namespace definition: alternatively use:

## c(kml ='http://opengis.net/kml/2.2')

kml_generalize <- function(kml_file, min_for_gen, fac) {

doc <- xmlInternalTreeParse(kml_file)

nodes <- getNodeSet(doc, "//kml:LineString//kml:coordinates", c(kml = "http://earth.google.com/kml/2.0"))

mapply(generalize_kml_contour_node, nodes, min_for_gen, fac)

saveXML(doc, paste0(dirname(kml_file), "/simpl_", basename(kml_file)))

}

## get KML-files and generalize them

kml_file <- tempfile(fileext = ".kml")

download.file("http://dev.openlayers.org/releases/OpenLayers-2.13.1/examples/kml/lines.kml",

kml_file, mode = "wb")

kml_generalize(kml_file, 5, 0.9)

shell.exec(kml_file)

shell.exec(paste0(dirname(kml_file), "/simpl_", basename(kml_file)))17 Mar 2014

Download all Documents from Google Drive with R

A commentator on my blog recently asked if it is possible to retrieve all direct links to your Google Documents. And indeed it can be very easily done with R, just like so:

# you'll need RGoogleDocs (with RCurl dependency..)

install.packages("RGoogleDocs", repos = "http://www.omegahat.org/R", type="source")

library(RGoogleDocs)

gpasswd = "mysecretpassword"

auth = getGoogleAuth("kay.cichini@gmail.com", gpasswd)

con = getGoogleDocsConnection(auth)

CAINFO = paste(system.file(package="RCurl"), "/CurlSSL/ca-bundle.crt", sep = "")

docs <- getDocs(con, cainfo = CAINFO)

# get file references

hrefs <- lapply(docs, function(x) return(x@access["href"]))

keys <- sub(".*/full/.*%3A(.*)", "\\1", hrefs)

types <- sub(".*/full/(.*)%3A.*", "\\1", hrefs)

# make urls (for url-scheme see: http://techathlon.com/download-shared-files-google-drive/)

# put format parameter for other output formats!

pdf_urls <- paste0("https://docs.google.com/uc?export=download&id=", keys)

doc_urls <- paste0("https://docs.google.com/document/d/", keys, "/export?format=", "txt")

# download documents with your browser

gdoc_ids <- grep("document", types)

lapply(gdoc_ids, function(x) shell.exec(doc_urls[x]))

pdf_ids <- grep("pdf", types, ignore.case = T)

lapply(pdf_ids, function(x) shell.exec(pdf_urls[x]))

3 Mar 2014

Use Case: Make Contour Lines for Google Earth with Spatial R

Here's comes a script I wrote for creating contour lines in KML-format to be used with Google Earth http://github.com/gimoya/theBioBucket-Archives/blob/master/R/contours_for_google_earth.RIf you want to check or just use the datasets I created for the Alps region, you can download it here: http://terrain-overlays.blogspot.co.at/index.html

1 Mar 2014

Use GDAL from R Console to Split Raster into Tiles

When working with raster datasets I often encounter performance issues caused by the large filesizes. I thus wrote up a little R function that invokes gdal_translate which would split the raster into parts which makes subsequent processing more CPU friendly. I didn't use built-in R functions simply because performance is much better when using gdal from the command line..

The screenshot to the left shows a raster in QGIS that was split into four parts with the below script.

The screenshot to the left shows a raster in QGIS that was split into four parts with the below script.

## get filesnames (assuming the datasets were downloaded already.

## please see http://thebiobucket.blogspot.co.at/2013/06/use-r-to-bulk-download-digital.html

## on how to download high-resolution DEMs)

setwd("D:/GIS_DataBase/DEM")

files <- dir(pattern = ".hgt")

## function for single file processing mind to replace the PATH to gdalinfo.exe!

## s = division applied to each side of raster, i.e. s = 2 gives 4 tiles, 3 gives 9, etc.

split_raster <- function(file, s = 2) {

filename <- gsub(".hgt", "", file)

gdalinfo_str <- paste0("\"C:/OSGeo4W64/bin/gdalinfo.exe\" ", file)

# pick size of each side

x <- as.numeric(gsub("[^0-9]", "", unlist(strsplit(system(gdalinfo_str, intern = T)[3], ", "))))[1]

y <- as.numeric(gsub("[^0-9]", "", unlist(strsplit(system(gdalinfo_str, intern = T)[3], ", "))))[2]

# t is nr. of iterations per side

t <- s - 1

for (i in 0:t) {

for (j in 0:t) {

# [-srcwin xoff yoff xsize ysize] src_dataset dst_dataset

srcwin_str <- paste("-srcwin ", i * x/s, j * y/s, x/s, y/s)

gdal_str <- paste0("\"C:/OSGeo4W64/bin/gdal_translate.exe\" ", srcwin_str, " ", "\"", file, "\" ", "\"", filename, "_", i, "_", j, ".tif\"")

system(gdal_str)

}

}

}

## process all files and save to same directory

mapply(split_raster, files, 2)

5 Feb 2014

Use Case: Spatial R & Google Earth for Terrain Analyses

I'd like to share code that uses spatial R and Google Earth for terrain analyses. In this example I took SRTM data at 1" resolution from http://www.viewfinderpanoramas.org/dem3.html#alps read it into R did a little processing and finally wrapped it up in a KML-file that I use as ground-overlay in Google Earth. In fact I eventually converted it into a sqlitedb with MAPC2MAPC for usage on a mobile device.

See the code here on github..

See the code here on github..

20 Jan 2014

Get No. of Google Search Hits with R and XML

UPDATE: Thanks to Max Ghenis for updating my R-script which I wrote a while back - the below R-script can now be used again for pulling the number of hits from Google-Search.

GoogleHits <- function(input)

{

require(XML)

require(RCurl)

url <- paste("https://www.google.com/search?q=\"",

input, "\"", sep = "")

CAINFO = paste(system.file(package="RCurl"), "/CurlSSL/ca-bundle.crt", sep = "")

script <- getURL(url, followlocation = TRUE, cainfo = CAINFO)

doc <- htmlParse(script)

res <- xpathSApply(doc, '//*/div[@id="resultStats"]', xmlValue)

cat(paste("\nYour Search URL:\n", url, "\n", sep = ""))

cat("\nNo. of Hits:\n")

return(as.integer(gsub("[^0-9]", "", res)))

}

# Example:

GoogleHits("R%Statistical%Software")

lapply(list_of_search_terms, GoogleHits)

16 Sept 2013

R GIS: Polygon Intersection with gIntersection{rgeos}

A short tutorial on doing intersections in R GIS. gIntersection{rgeos} will pick the polygons of the first submitted polygon contained within the second poylgon - this is done without cutting the polygon's edges which cross the clip source polygon. For the function that I use to download the example data, url_shp_to_spdf() please see HERE.

library(rgeos)

library(dismo)

URLs <- c("http://gis.tirol.gv.at/ogd/umwelt/wasser/wis_gew_pl.zip", # all water bodies in Tyrol

"http://gis.tirol.gv.at/ogd/umwelt/wasser/wis_tseepeicher_pl.zip") # only artificial..

y <- lapply(URLs, url_shp_to_spdf)

z <- unlist(unlist(y))

a <- getData('GADM', country = "AT", level = 2)

b <- a[a$NAME_2=="Innsbruck Land", ] # political district's boundaries

c <- spTransform(b, z[[1]]@proj4string) # (a ring polygon)

z1_c <- gIntersection(z[[1]], c, byid = TRUE)

z2_c <- gIntersection(z[[2]], c, byid = TRUE)

plot(c)

plot(z1_c, lwd = 5, border = "red", add = T)

plot(z2_c, lwd = 5, border = "green", add = T)

plot(z[[1]], border = "blue", add = T) # I plot this on top, so it will be easier to identify

plot(z[[2]], border = "brown", add = T)

Batch Downloading Zipped Shapefiles with R

Here's a function I use to download multiple zipped shapefiles from url and load them to the workspace:

URLs <- c("http://gis.tirol.gv.at/ogd/umwelt/wasser/wis_gew_pl.zip",

"http://gis.tirol.gv.at/ogd/umwelt/wasser/wis_tseepeicher_pl.zip")

url_shp_to_spdf <- function(URL) {

require(rgdal)

wd <- getwd()

td <- tempdir()

setwd(td)

temp <- tempfile(fileext = ".zip")

download.file(URL, temp)

unzip(temp)

shp <- dir(tempdir(), "*.shp$")

lyr <- sub(".shp$", "", shp)

y <- lapply(X = lyr, FUN = function(x) readOGR(dsn=shp, layer=lyr))

names(y) <- lyr

unlink(dir(td))

setwd(wd)

return(y)

}

y <- lapply(URLs, url_shp_to_spdf)

z <- unlist(unlist(y))

# finally use it:

plot(z[[1]])

Follow Up on Spatial Overlays with R - Getting Altitude for a Set of Points

A short follow up on a previous post on spatial overlays with R.

library(sp)

library(dismo)

# some addresses in Austria

pts <- geocode(c("Aldrans, Grubenweg", "Wien, Stephansdom", "Salzburg, Mozartplatz"))

# make pts spatial

coords <- SpatialPoints(pts[, c("longitude", "latitude")])

spdf_pts <- SpatialPointsDataFrame(coords, pts)

# assign CRS/projection (which is WGS 1984)

crs <- CRS(" +proj=longlat +datum=WGS84 +ellps=WGS84 +towgs84=0,0,0")

proj4string(spdf_pts) <- crs

# spatial data to extract (altitude)

alt <- getData('alt', country = "AT")

# convert alt from raster to grid (needed for over::sp)

# and assign CRS (which is the same as spdf_pts, see > alt@crs)

# don't mind warning - the CRS is the same..

spdf_alt <- as(alt, 'SpatialGridDataFrame')

proj4string(spdf_alt) <- crs

# view

plot(alt)

# plot pts on top

plot(spdf_pts, cex = 2, col = 2, add = T)

# check data

str(spdf_pts)

str(spdf_alt)

# get the raster/pixel/grid data (> ?over):

cbind(spdf_pts$interpretedPlace, over(spdf_pts, spdf_alt))

# result:

# spdf_pts$interpretedPlace AUT_msk_alt

# 1 Grubenweg, 6071 Aldrans, Austria 736

# 3 Saint Stephen's Vienna, Stephansplatz 1, 1010 Vienna, Austria 183

# 2 Mozartplatz, 5020 Salzburg, Austria 450

10 Sept 2013

Loading Multiple Shapefiles to the R-Console Simultaneously

A quick tip on how to load multiple shapefiles (point shapefiles, i.e.) to the R console in one go:

library(maptools)

# get all files with the .shp extension from working directory

setwd("D:/GIS_DataBase/GIS_Tirol/Tirol_Verbreitungskarten/Verbreitungs_Daten")

shps <- dir(getwd(), "*.shp")

# the assign function will take the string representing shp and turn it into a variable

# which holds the spatial points data

for (shp in shps) assign(shp, readShapePoints(shp))

plot(get(shp[1])) # i.e.

# ...done

19 Aug 2013

Text Mining with R - Comparing Word Counts in Two Text Documents

Here's what I came up with to compare word counts in two pieces of text. If you got any idea, I'd love to learn about alternatives!

## a function that compares word counts in two texts

wordcount <- function(x, y, stem = F, minlen = 1, marg = F) {

require(tm)

x_clean <- unlist(strsplit(removePunctuation(x), "\\s+"))

y_clean <- unlist(strsplit(removePunctuation(y), "\\s+"))

x_clean <- tolower(x_clean[nchar(x_clean) >= minlen])

y_clean <- tolower(y_clean[nchar(y_clean) >= minlen])

if ( stem == T ) {

x_stem <- stemDocument(x_clean)

y_stem <- stemDocument(y_clean)

x_tab <- table(x_stem)

y_tab <- table(y_stem)

cnam <- sort(unique(c(names(x_tab), names(y_tab))))

z <- matrix(rep(0, 3*(length(cnam)+1)), 3, length(cnam)+1, dimnames=list(c("x", "y", "rowsum"), c(cnam, "colsum")))

z["x", names(x_tab)] <- x_tab

z["y", names(y_tab)] <- y_tab

z["rowsum",] <- colSums(z)

z[,"colsum"] <- rowSums(z)

ifelse(marg == T, return(t(z)), return(t(z[1:dim(z)[1]-1, 1:dim(z)[2]-1])))

} else {

x_tab <- table(x_clean)

y_tab <- table(y_clean)

cnam <- sort(unique(c(names(x_tab), names(y_tab))))

z <- matrix(rep(0, 3*(length(cnam)+1)), 3, length(cnam)+1, dimnames=list(c("x", "y", "rowsum"), c(cnam, "colsum")))

z["x", names(x_tab)] <- x_tab

z["y", names(y_tab)] <- y_tab

z["rowsum",] <- colSums(z)

z[,"colsum"] <- rowSums(z)

ifelse(marg == T, return(t(z)), return(t(z[1:dim(z)[1]-1, 1:dim(z)[2]-1])))

}

}

## example

x = "Hello new, new world, this is one of my nice text documents - I wrote it today"

y = "Good bye old, old world, this is a nicely and well written text document"

wordcount(x, y, stem = T, minlen = 3, marg = T)

Follow-Up:

Thanks a lot for the comments! As I'm not that much into text mining I was trying to reinvent the wheel (in a rather dilettante manner) - missing the capabilities of existing packages. Here's the shortest code that I was able to find doing the same thing (with the potential to get out much more of it, if desired).x = "Hello new, new world, this is one of my nice text documents"

y = "Good bye old, old world, this is a text document"

z = "Good bye old, old world, this is a text document with WORDS for STEMMING - BTW, what is the stem of irregular verbs like write, wrote, written?"

# make a corpus with two or more documents (the cool thing here is that it could be endless (almost) numbers

# of documents to be cross tabulated with the used terms. And the control function enables you

# to do lots of tricks with it before it will be tabulated, see ?termFreq, i.e.)

xyz <- as.list(c(x,y,z))

xyz_corp <- Corpus(VectorSource(xyz))

cntr <- list(removePunctuation = T, stemming = T, wordLengths = c(3, Inf))

as.matrix(TermDocumentMatrix(xyz_corp, control = cntr))

20 Jun 2013

Spatial Overlays with R - Retrieving Polygon Attributes for a Set of Points

A short tutorial for spatial overlays using R-GIS..

library(sp)

library(dismo)

# spatial data (political districts of Austria)

gadm <- getData('GADM', country = "AT", level = 2)

# view

plot(gadm)

# some addresses

pts <- geocode(c("Aldrans, Grubenweg", "Wien, Stephansdom", "Salzburg, Mozartplatz"))

# make pts spatial

coords <- SpatialPoints(pts[, c("longitude", "latitude")])

spdf_pts <- SpatialPointsDataFrame(coords, pts)

# assign CRS/projection (which is WGS 1984)

crs <- CRS(" +proj=longlat +datum=WGS84 +ellps=WGS84 +towgs84=0,0,0")

proj4string(spdf_pts) <- crs

# check data

str(spdf_pts)

str(gadm)

# plot pts on top

plot(spdf_pts, cex = 2, col = 2, add = T)

# do an intersection (points in polygon)

# yielding the polygon's attribute data

over(spdf_pts, gadm)

18 Jun 2013

R GIS: Terrain Analysis for Polygons as Simple as it Gets!

library(rgdal)

library(raster)

alt <- getData('alt', country = "AT")

gadm <- getData('GADM', country = "AT", level = 2)

gadm_sub <- gadm[sample(1:length(gadm), 5), ]

plot(alt)

plot(gadm_sub, add=T)

asp <- terrain(alt, opt = "aspect", unit = "degrees", df = F)

slo <- terrain(alt, opt = "slope", unit = "degrees", df = F)

> extract(slo, gadm_sub, fun = mean, na.rm = T, small = T, df = T)

ID slope

1 1 9.959053

2 2 1.047443

3 3 7.456165

4 4 1.673786

5 5 11.946553

> extract(asp, gadm_sub, fun = mean, na.rm = T, small = T, df = T)

ID aspect

1 1 170.8065

2 2 184.0130

3 3 190.7155

4 4 136.8953

5 5 205.2115

Use R to Bulk-Download Digital Elevation Data with 1" Resolution

Here's a little r-script to convenientely download high quality digital elevation data, i.e. for the Alps, from HERE:

p.s.: Also check raster::getData which pulls SRTM data at 90m resolution for a location / region!

require(XML)

dir.create("D:/GIS_DataBase/DEM/")

setwd("D:/GIS_DataBase/DEM/")

doc <- htmlParse("http://www.viewfinderpanoramas.org/dem3.html#alps")

urls <- paste0("http://www.viewfinderpanoramas.org", xpathSApply(doc,'//*/a[contains(@href,"/dem1/N4")]/@href'))

names <- gsub(".*dem1/(\\w+\\.zip)", "\\1", urls)

for (i in 1:length(urls)) download.file(urls[i], names[i])

# unzip all files in dir and delete them afterwards

sapply(list.files(pattern = "*.zip"), unzip)

unlink(list.files(pattern = "*.zip"))

p.s.: Also check raster::getData which pulls SRTM data at 90m resolution for a location / region!

21 May 2013

R Quick Tip: Shutdown Windows after Script Has Finished

Quite often I have long procedures running and want to do this over night. However, my computer would still be running all night after the script has finished. This is easily circumvented by the following lines that I put at the end of such a script:

# set working dir

# setwd("C:/Users/Kay/Desktop")

# long procedure:

for(i in 1:1e+5) {cat(i); cat("\n..................\n")}

d <- "something"

# save history

savehistory()

# and worspace

save.image()

# then shutdown after 240 s

system("C:/Windows/system32/shutdown.exe -f -s -t 240")

# this would abort the shutdown:

# system("C:/Windows/system32/shutdown.exe -a")

6 May 2013

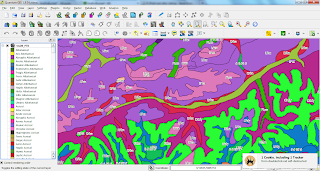

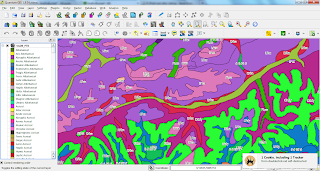

Creating a QGIS-Style (qml-file) with an R-Script

How to get from a txt-file with short names and labels to a QGIS-Style (qml-file)?

I used the below R-script to create a style for this legend table where I copy-pasted the parts I needed to a txt-file, like for the WRB-FULL (WRB-FULL: Full soil code of the STU from the World Reference Base for Soil Resources). The vector data to which I applied the style is freely available at ESDAC - you just need to submit a form to get access to the data. BTW, thanks to a helping hand on SO.

You can find the QGIS-styler script in theBioBucket-Repository on GitHub.

I used the below R-script to create a style for this legend table where I copy-pasted the parts I needed to a txt-file, like for the WRB-FULL (WRB-FULL: Full soil code of the STU from the World Reference Base for Soil Resources). The vector data to which I applied the style is freely available at ESDAC - you just need to submit a form to get access to the data. BTW, thanks to a helping hand on SO.

You can find the QGIS-styler script in theBioBucket-Repository on GitHub.

21 Apr 2013

Programmatically Download CORINE Land Cover Seamless Vector Data with R

Thanks to a helpful SO-Answer I was able to download all CLC vector data (43 zip-files) programmatically:

require(XML)

path_to_files <- "D:/GIS_DataBase/CorineLC/Seamless"

dir.create(path_to_files)

setwd(path_to_files)

doc <- htmlParse("http://www.eea.europa.eu/data-and-maps/data/clc-2006-vector-data-version-2")

urls <- xpathSApply(doc,'//*/a[contains(@href,".zip/at_download/file")]/@href')

# function to get zip file names

get_zip_name <- function(x) unlist(strsplit(x, "/"))[grep(".zip", unlist(strsplit(x, "/")))]

# function to plug into sapply

dl_urls <- function(x) try(download.file(x, get_zip_name(x), mode = "wb"))

# download all zip-files

sapply(urls, dl_urls)

# function for unzipping

try_unzip <- function(x) try(unzip(x))

# unzip all files in dir and delete them afterwards

sapply(list.files(pattern = "*.zip"), try_unzip)

# unlink(list.files(pattern = "*.zip"))

12 Apr 2013

Download File from Google Drive/Docs Programmatically with R

Following up my lattest posting on how to download files from the cloud with R..

dl_from_GoogleD <- function(output, key, format) {

## Arguments:

## output = output file name

## key = Google document key

## format = output format (pdf, rtf, doc, txt..)

## Note: File must be shareable!

require(RCurl)

bin <- getBinaryURL(paste0("https://docs.google.com/document/d/", key, "/export?format=", format),

ssl.verifypeer = FALSE)

con <- file(output, open = "wb")

writeBin(bin, con)

close(con)

message(noquote(paste(output, "read into", getwd())))

}

# Example:

dl_from_GoogleD(output = "dl_test.pdf",

key = "1DdauvkcVm5XtRBkQIv1na8PeLAwpCBdW8pALCFpRWeM",

format = "pdf")

shell.exec("dl_test.pdf")

library(RCurl)

setwd(tempdir())

destfile = "test_google_docs.csv"

x = getBinaryURL("https://docs.google.com/uc?export=download&id=0B2wAunwURQNsR0I0a0NlQUlJdzA", followlocation = TRUE, ssl.verifypeer = FALSE)

writeBin(x, destfile, useBytes = TRUE)

shell.exec(paste(tempdir(), "/test_google_docs.csv", sep = ""))

10 Apr 2013

Tweaking Movie Subtitles with R

I use R to fix subtitles that are not in sync with my movies. For the example below the subs were showing too early - so I added some time to each sequence in the srt file. For simplicity I used exactly 1 second in the below example.

You'll see that I use my function dl_from_dropbox(), on which I wrote a post previously, to get the example file!

You'll see that I use my function dl_from_dropbox(), on which I wrote a post previously, to get the example file!

Download Files from Dropbox Programmatically with R

Here is a usefull snippet that I stole from qdap::url_dl to download files from my Dropbox to the working directory.

Argument x is the document name and d the document key.

Argument x is the document name and d the document key.

dl_from_dropbox <- function(x, key) {

require(RCurl)

bin <- getBinaryURL(paste0("https://dl.dropboxusercontent.com/s/", key, "/", x),

ssl.verifypeer = FALSE)

con <- file(x, open = "wb")

writeBin(bin, con)

close(con)

message(noquote(paste(x, "read into", getwd())))

}

# Example:

dl_from_dropbox("GViewer_Embeds.txt", "06fqlz6gswj80nj")

shell.exec("GViewer_Embeds.txt")

22 Dec 2012

Convert OpenStreetMap Objects to KML with R

A quick geo-tip:

With the osmar and maptools package you can easily pull an OpenStreetMap object and convert it to KML, like below (thanks to adibender helping out on SO). I found the relation ID by googling for it (www.google.at/search?q=openstreetmap+relation+innsbruck).

With the osmar and maptools package you can easily pull an OpenStreetMap object and convert it to KML, like below (thanks to adibender helping out on SO). I found the relation ID by googling for it (www.google.at/search?q=openstreetmap+relation+innsbruck).

# get OSM data

library(osmar)

library(maptools)

innsbruck <- get_osm(relation(113642), full = T)

sp_innsbruck <- as_sp(innsbruck, what = "lines")

# convert to KML

for( i in seq_along(sp_innsbruck) ) {

kmlLine(sp_innsbruck@lines[[i]], kmlfile = "innsbruck.kml",

lwd = 3, col = "blue", name = "Innsbruck")

}

shell.exec("innsbruck.kml")

16 Dec 2012

Taxonomy with R: Exploring the Taxize-Package

First off, I'd really like to give a shout-out to the brave people who have created and maintain this great package - the fame is yours!

First off, I'd really like to give a shout-out to the brave people who have created and maintain this great package - the fame is yours!So, while exploring the capabilities of the package some issues with the ITIS-Server arose and with large datasets things weren't working out quite well for me.

I then switched to the NCBI API and saw that the result is much better here (way quicker, on first glance also a higher coverage).

At the time there is no taxize-function that will pull taxonomic details from a classification returned by NCBI, that's why I plugged together a little wrapper - see here:

# some species data:

spec <- data.frame("Species" = I(c("Bryum schleicheri", "Bryum capillare", "Bryum argentum", "Escherichia coli", "Glis glis")))

spl <- strsplit(spec$Species, " ")

spec$Genus <- as.character(sapply(spl, "[[", 1))

# for pulling taxonomic details we'd best submit higher rank taxons

# in this case Genera. Then we'll submit Genus Bryum only once and

# save some computation time (might be an issue if you deal

# with large datasets..)

gen_uniq <- unique(spec$Genus)

# function for pulling classification details ("phylum" in this case)

get_sys_level <- function(x){ require(taxize)

a <- classification(get_uid(x))

y <- data.frame(a[[1]]) # if there are multiple results, take the first..

z <- tryCatch(as.character(y[which(y[,2] == "phylum"), 1]), # in case of any other errors put NA

error = function(e) NA)

z <- ifelse(length(z) != 0, z, NA) # if the taxonomic detail is not covered return NA

return(data.frame(Taxon = x, Syslevel = z))

}

# call function and rbind the returned values

result <- do.call(rbind, lapply(gen_uniq, get_sys_level))

print(result)

# Taxon Syslevel

# 1 Bryum Streptophyta

# 2 Escherichia Proteobacteria

# 3 Glis Chordata

# now merge back to the original data frame

spec_new <- merge(spec, result, by.x = "Genus", by.y = "Taxon")

print(spec_new)

# Genus Species Syslevel

# 1 Bryum Bryum schleicheri Streptophyta

# 2 Bryum Bryum capillare Streptophyta

# 3 Bryum Bryum argentum Streptophyta

# 4 Escherichia Escherichia coli Proteobacteria

# 5 Glis Glis glis Chordata

#

28 Nov 2012

So, What Are You? ..A Plant? ..An Animal? -- Nope, I'm a Fungus!

Lately I had a list of about 1000 species names and I wanted to filter out only the plants as that is where I come from. I knew that Scott Chamberlain has put together the ritis package which obviously can do such things. However, I knew of ITIS before and was keen to give it a shot..

Here's what I've come up with (using the ITIS API, updated on 11. Dec 2012, previous version had a flaw with indefinite matches.. Should be ok now. However, there are of course species that are not covered by the database, i.e. Ixodes, see below):

library(XML)

get_tsn <- function(sp_name) {

require(XML)

units <- tolower(unlist(strsplit(sp_name, " ")))

# valid string?

if (length(units) > 2) { stop("...No valid search string submitted (two words seperated by one space)!") }

itis_xml <- htmlParse(paste("http://www.itis.gov/ITISWebService/services/ITISService/searchByScientificName?srchKey=",

sp_name, sep=""))

tsn <- xpathSApply(itis_xml, "//tsn", xmlValue)

unitname1 <- tolower(gsub("\\s+", "", xpathSApply(itis_xml, "//unitname1", xmlValue)))

unitname2 <- tolower(gsub("\\s+", "", xpathSApply(itis_xml, "//unitname2", xmlValue)))

unitname3 <- tolower(gsub("\\s+", "", xpathSApply(itis_xml, "//unitname3", xmlValue)))

# sp_name = only Genus, get tsn were sp_name matches perfectly and unitname2 (lower level taxon) is absent

if (length(units) == 1) {

return(tsn[tolower(sub("\\s+", "", unitname1)) == tolower(sp_name) & unitname2 == ""]) }

# sp_name = Genus and Epitheton, get tsn where both match perfectly and unitname3 (lower level taxon) is absent

if (length(units) == 2) {

return(tsn[unitname1 == units[1] &

unitname2 == units[2] &

nchar(unitname3) == 0]) }

}

get_kngdm <- function(tsn) {

kngdm <- xpathSApply(htmlParse(paste("http://www.itis.gov/ITISWebService/services/ITISService/getKingdomNameFromTSN?tsn=",

tsn, sep="")),

"//kingdomname", xmlValue)

return(kngdm)

}

get_tsn_kngdm <- function(x) {y = get_tsn(x)

z = get_kngdm(y)

return(list(Name = x, TSN = y, Kingdom = z))

}

# I had some API-related errors (I guess it was mysteriously not answering in

# some cases). I couldn't resolve this and thus implemented tryCatch

get_tsn_kngdm_try <- function(x) tryCatch(get_tsn_kngdm(x), error = function(e) NULL)

sp_names <- c("Clostridium", "Physcia", "Ixodes", "LYNX", "Homo sapiens", "Canis lupus")

system.time(result <- data.frame(do.call(rbind, lapply(sp_names, FUN = get_tsn_kngdm_try))))

result

system.time(result <- data.frame(do.call(rbind, lapply(sp_names, FUN = get_tsn_kngdm_try))))

#

# result

# User System verstrichen

# 1.54 0.01 33.66

# Name TSN Kingdom

# 1 Clostridium 555645 Monera

# 2 Physcia 14024 Fungi

# 3 Viola 22030 Plantae

# 4 Ixodes

# 5 LYNX 180581 Animalia

# 6 Homo sapiens 180092 Animalia

# 7 Canis lupus 180596 Animalia

#

29 Sept 2012

Merging Dataframes by Partly Matching String

The latest posting by Tony Hirst sparked my attention because I was thinking about a very similar issue recently.

I was also fiddling around with agrep and adist until I realised that for this very issue matching of substrings is not as important as matching multiple words.. With this different approach I quite easily matched all but 3 countries.

See what I did:

Now, expanding on this concept by introduction of partial matching would most propably result in a 100% match...

I was also fiddling around with agrep and adist until I realised that for this very issue matching of substrings is not as important as matching multiple words.. With this different approach I quite easily matched all but 3 countries.

See what I did:

## look up matches of one dataframe in another dataframe.

## the strings to be matched are comprised of 1 or more words

## and seperated by white space.

## method: match strings that have the highest fraction of words that match up

d1 <- read.csv("http://s.telegraph.co.uk/graphics/conrad/PercentageUsingTheNet.csv",

header = T, sep = ",", encoding = "UTF-8")

d2 <- read.csv("http://www.iso.org/iso/country_names_and_code_elements_txt",

header = T, sep = ";", encoding = "UTF-8")

## strings to be compared d1$ECONOMY and d2$Country.Name

mystr.1 <- as.character(d1$ECONOMY)

mystr.2 <- as.character(d2$Country.Name)

mystr.3 <- as.character(d2$ISO.3166.1.alpha.2.code)

## remove punctuation and multiple spaces

mystr.1 <- tolower(gsub("[^[:alnum:][:space:]]", "", mystr.1))

mystr.1 <- gsub("\\s+", " ", mystr.1)

mystr.2 <- tolower(gsub("[^[:alnum:][:space:]]", "", mystr.2))

mystr.2 <- gsub("\\s+", " ", mystr.2)

## function that finds matching words in string (words seperated by single space!)

n.wordshared <- function(x, y) {

sum(!is.na(match(unlist(strsplit(x, " ")),

unlist(strsplit(y, " ")))

)

)

}

## example

n.wordshared(x = "hello world", y = "good bye world")

## [1] 1

## function that calculates fraction of shared words

fr.wordshared <- function(x, y) {

n.wordshared(x, y) / (length(unique(unlist(strsplit(x, " "))))

+ length(unique(unlist(strsplit(y, " ")))))

}

## example

fr.wordshared(x = "hello world", y = "good bye world")

## [1] 0.2

mydf <- data.frame(str1 = mystr.1, mymatch = "", match.iso = "",

stringsAsFactors = F)

## now look up every element of string 1 in string 2

## and if there are matching words assign match to dataframe

for (i in 1:nrow(mydf)) {

xx <- sapply(mystr.2, fr.wordshared, y = mystr.1[i])

if (sum(xx) == 0) {

mydf$mymatch[i] <- NA

mydf$match.iso[i] <- NA

} else {

mydf$mymatch[i] <- paste(names(which(xx == max(xx))), collapse = "; ")

mydf$match.iso[i] <- paste(mystr.3[as.integer(which(xx == max(xx)))], collapse = "; ")

}

}

## see result

print(mydf)

## these are the multiple matches

(aa <- mydf[grep(";", mydf$mymatch), ])

##

## str1 mymatch match.iso

## 28 slovak republic czech republic; dominican republic CZ; DO

## these were not matched

(bb <- mydf[is.na(mydf$mymatch), ])

## str1 mymatch match.iso

##

## 61 russia NA NA

## 108 syria NA NA

Now, expanding on this concept by introduction of partial matching would most propably result in a 100% match...

28 Sept 2012

Reading and Text Mining a PDF-File in R

I just added this R-script that reads a PDF-file to R and does some text mining with it to my Github repo..

I just added this R-script that reads a PDF-file to R and does some text mining with it to my Github repo..6 Sept 2012

Get Long-Term Climate Data from KNMI Climate Explorer

You can query global climate data from the KNMI Climate Explorer (the KNMI is the Royal Netherlands Metereological Institute) with R.

Here's a little example how I retreived data for my hometown Innsbruck, Austria and plotted annual total precipitation. You can choose station data by pointing at a map, by setting coordinates, etc.

Here's a little example how I retreived data for my hometown Innsbruck, Austria and plotted annual total precipitation. You can choose station data by pointing at a map, by setting coordinates, etc.

31 Aug 2012

Follow-Up: Making a Word Cloud for a Search Result from GScholar_Scraper_3.1

Here's a short follow-up on how to produce a word cloud for a search result from GScholarScraper_3.1:

Here's a short follow-up on how to produce a word cloud for a search result from GScholarScraper_3.1:# File-Name: GScholarScraper_3.1.R

# Date: 2012-08-22

# Author: Kay Cichini

# Email: kay.cichini@gmail.com

# Purpose: Scrape Google Scholar search result

# Packages used: XML

# Licence: CC BY-SA-NC

#

# Arguments:

# (1) input:

# A search string as used in Google Scholar search dialog

#

# (2) write:

# Logical, should a table be writen to user default directory?

# if TRUE ("T") a CSV-file with hyperlinks to the publications will be created.

#

# Difference to version 3:

# (3) added "since" argument - define year since when publications should be returned..

# defaults to 1900..

#

# (4) added "citation" argument - logical, if "0" citations are included

# defaults to "1" and no citations will be included..

# added field "YEAR" to output

#

# Caveat: if a submitted search string gives more than 1000 hits there seem

# to be some problems (I guess I'm being stopped by Google for roboting the site..)

#

# And, there is an issue with this error message:

# > Error in htmlParse(URL):

# > error in creating parser for http://scholar.google.com/scholar?q

# I haven't figured out his one yet.. most likely also a Google blocking mechanism..

# Reconnecting / new IP-address helps..

GScholar_Scraper <- function(input, since = 1900, write = F, citation = 1) {

require(XML)

# putting together the search-URL:

URL <- paste("http://scholar.google.com/scholar?q=", input, "&as_sdt=1,5&as_vis=",

citation, "&as_ylo=", since, sep = "")

cat("\nThe URL used is: ", "\n----\n", paste("* ", "http://scholar.google.com/scholar?q=", input, "&as_sdt=1,5&as_vis=",

citation, "&as_ylo=", since, " *", sep = ""))

# get content and parse it:

doc <- htmlParse(URL)

# number of hits:

h1 <- xpathSApply(doc, "//div[@id='gs_ab_md']", xmlValue)

h2 <- strsplit(h1, " ")[[1]][2]

num <- as.integer(sub("[[:punct:]]", "", h2))

cat("\n\nNumber of hits: ", num, "\n----\n", "If this number is far from the returned results\nsomething might have gone wrong..\n\n", sep = "")

# If there are no results, stop and throw an error message:

if (num == 0 | is.na(num)) {

stop("\n\n...There is no result for the submitted search string!")

}

pages.max <- ceiling(num/100)

# 'start' as used in URL:

start <- 100 * 1:pages.max - 100

# Collect URLs as list:

URLs <- paste("http://scholar.google.com/scholar?start=", start, "&q=", input,

"&num=100&as_sdt=1,5&as_vis=", citation, "&as_ylo=", since, sep = "")

scraper_internal <- function(x) {

doc <- htmlParse(x, encoding="UTF-8")

# titles:

tit <- xpathSApply(doc, "//h3[@class='gs_rt']", xmlValue)

# publication:

pub <- xpathSApply(doc, "//div[@class='gs_a']", xmlValue)

# links:

lin <- xpathSApply(doc, "//h3[@class='gs_rt']/a", xmlAttrs)

# summaries are truncated, and thus wont be used..

# abst <- xpathSApply(doc, '//div[@class='gs_rs']', xmlValue)

# ..to be extended for individual needs

options(warn=(-1))

dat <- data.frame(TITLES = tit, PUBLICATION = pub,

YEAR = as.integer(gsub(".*\\s(\\d{4})\\s.*", "\\1", pub)),

LINKS = lin)

options(warn=0)

return(dat)

}

result <- do.call("rbind", lapply(URLs, scraper_internal))

if (write == T) {

result$LINKS <- paste("=Hyperlink(","\"", result$LINKS, "\"", ")", sep = "")

write.table(result, "GScholar_Output.CSV", sep = ";",

row.names = F, quote = F)

shell.exec("GScholar_Output.CSV")

} else {

return(result)

}

}

# EXAMPLE:

input <- "allintitle:amphibian+diversity"

df <- GScholar_Scraper(input, since = 1980, citation = 1)

#install.packages("tm")

library(tm)

#install.packages("wordcloud")

library(wordcloud)

corpus <- Corpus(VectorSource(df$TITLES))

corpus <- tm_map(corpus, function(x)removeWords(x, c(stopwords(), "PDF", "B", "DOC", "HTML", "BOOK", "CITATION")))

corpus <- tm_map(corpus, removePunctuation)

tdm <- TermDocumentMatrix(corpus)

m <- as.matrix(tdm)

v <- sort(rowSums(m), decreasing = TRUE)

d <- data.frame(word = names(v), freq = v)

# remove numbers from strings:

d <- d[-grep("[0-9]", d$word), ]

# print wordcloud:

wordcloud(d$word, d$freq)

25 Aug 2012

Toy Example with GScholarScraper_3.1

A commentator on my blog brought up this nice idea of how to use the GScholarScraper function for bibliometrics..

A commentator on my blog brought up this nice idea of how to use the GScholarScraper function for bibliometrics..I altered the code a little bit which enables to set a year since when results should be returned and added a field to the output collecting the year of publication. With this you can simply do something like this:

input <- "intitle:metapopulation"

df <- GScholar_Scraper(input, since = 1980, citation = 1)

nrow(df)

hist(df$YEAR, xlab = "Year",

main = "Frequency of Publications with\n\"METAPOPULATION\" in Title")22 Aug 2012

Web-Scraper for Google Scholar Updated!

I have updated the Google Scholar Web-Scraper Function GScholarScaper_2 to GScholarScraper_3 (and GScholarScaper_3.1) as it was outdated due to changes in the Google Scholar html-code. The new script is more slender and faster. It returns a dataframe or optionally a CSV-file with the titles, authors, publications & links. Feel free to report bugs, etc.

Update 11-07-2013: bug fixes due to google scholar code changes - https://github.com/gimoya/theBioBucket-Archives/blob/master/R/Functions/GScholarScraper_3.2.R. Note that since lately your IP will be blocked by Google at about the 1000th search result (cumulated) - so there's not much fun when you want to do some extensive bibliometrics..

Update 11-07-2013: bug fixes due to google scholar code changes - https://github.com/gimoya/theBioBucket-Archives/blob/master/R/Functions/GScholarScraper_3.2.R. Note that since lately your IP will be blocked by Google at about the 1000th search result (cumulated) - so there's not much fun when you want to do some extensive bibliometrics..

11 Aug 2012

Tcl/Tk GUI Example with Variable Input by User

I recently used R with GUI-elements for the first time and browsed through the available online resources, but I didn't quite find what I was searching for: The user should be able to put in some variables and call a function with a button. In the end I did it with a little help from SO. Here is the working example that I eventually plugged together:

29 Jun 2012

Use IUCN API with R & XPath

Thanks to a posting on R-sig-eco mailing list I learned of the IUCN-API. Here's a simple example for what can be done with it (output as pdf is HERE):

Thanks to a posting on R-sig-eco mailing list I learned of the IUCN-API. Here's a simple example for what can be done with it (output as pdf is HERE):25 Jun 2012

Avoid Overplotting of Text in Ordination Diagram

Referring to a recent posting on r-sig-eco mailing list I'll add this example to theBioBucket:

library(vegan)

library(vegan)

data(dune)

sol <- metaMDS(dune)

# use ordipointlabel -

# here is an example where I added cex according to species frequencies:

plot(sol, type = "n")

cex.lab = colSums(dune > 0) / nrow(dune) + 1

col.lab = rgb(0.2, 0.5, 0.4, alpha = 0.6)

ordipointlabel(sol, displ = "sp", col = col.lab, cex = cex.lab)

# you could also use pointLabel() from maptools package:

library(maptools)

x = as.vector(sol$species[,1])

y = as.vector(sol$species[,2])

w = row.names(sol$species)

plot(sol, type = "n")

points(sol, displ = "species", cex = 1, pch = 4, col = 3)

pointLabel(x, y, w, col = col.lab, cex = cex.lab)19 Jun 2012

A Wrapper Function for Instant Package Installation / Loading

Since library() and require() only accept input with length(input) = 1 it is necessary to make repeated calls - this can be quite annoying.. So, HERE is a little wrapper function for convenient package installation / loading. It installs packages if they are missing and loads them if there were not loaded yet. I have put it to my RProfile.site so I can conveniently install / load packages with only one call and am quite content with it..

11 Jun 2012

FloraWeb Plant Species Report via R

For German-spoken users I added the function floraweb_scrape.R that allows you to conveniently collect species data and print to a PDF-file (see this example output). The function accesses data provided by the web-site FloraWeb.de (BfN - Bundesministerium für Naturschutz).

You can use it as an interactive version (RTclTk) which I have put to a Github repository HERE.

Preview:

You can use it as an interactive version (RTclTk) which I have put to a Github repository HERE.

Preview:

14 May 2012

Source R-Script from Dropbox

A quick tip on how to source R-scripts from a Dropbox-account:

(1) Upload the script..

(2) Get link with the "get link" option. The link should look like "https://www.dropbox.com/s/XXXXXX/yourscript.R"..

(3) Grab this part "XXXXXX/yourscript.R" and paste it to "http://dl.dropbox.com/s/"..

(4) the final URL that can be sourced:

EDIT, March 2013:

This method is not working anymore. You can use the following approach instead:

(1) Upload the script..

(2) Get link with the "get link" option. The link should look like "https://www.dropbox.com/s/XXXXXX/yourscript.R"..

(3) Grab this part "XXXXXX/yourscript.R" and paste it to "http://dl.dropbox.com/s/"..

(4) the final URL that can be sourced:

source("http://dl.dropbox.com/s/XXXXXX/yourscript.R")source("http://dl.dropbox.com/s/c18lcwnnrodsevt/test_dropbox_source.R")

EDIT, March 2013:

This method is not working anymore. You can use the following approach instead:

library(RCurl)

setwd(tempdir())

destfile = "test.txt"

x = getBinaryURL("https://dl.dropbox.com/u/68286640/test_dropbox_source.R", followlocation = TRUE, ssl.verifypeer = FALSE)

writeBin(x, destfile, useBytes = TRUE)

source(paste(tempdir(), "/test.txt", sep = ""))

# remove files from tempdir:

unlink(dir())

2 May 2012

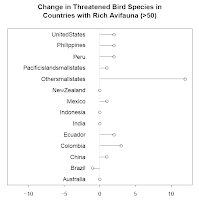

knitr-Example: Use World Bank Data to Generate Report for Threatened Bird Species

I'll use the below script that retrieves data for threatened bird species from the World Bank via its API and does some processing, plotting and analysis. There is a package (WDI) that allows you to access the data easily.

BTW, for simplicity I use knitr::stitch with its default template...

You should get something like THIS PDF.

EDIT, MARCH 2013

OUTDATED! you can use this approach instead:

# world bank indicators for species -

# I'll check bird species:

code <- as.character(WDIsearch("bird")[1,1])

bird_data <- WDI(country="all", indicator=code, start=2010, end=2012)

# remove NAs and select values in the range 50 - 1000:

bird_data_sub <- bird_data[!is.na(bird_data$EN.BIR.THRD.NO)&

bird_data$EN.BIR.THRD.NO < 1000&

bird_data$EN.BIR.THRD.NO > 50, ]

# change in numbers across years 2010 and 2011:

change.no <- aggregate(EN.BIR.THRD.NO ~ country, diff,

data = bird_data_sub)

# plot:

par(mar = c(3, 3, 5, 1))

plot(x = change.no[,2], y = 1:nrow(change.no),

xlim = c(-12, 12), xlab = "", ylab = "",

yaxt = "n")

abline(v = 0, lty = 2, col = "grey80")

title(main = "Change in Threatened Bird Species in\nCountries with Rich Avifauna (>50)")

text(y = 1:nrow(change.no),

x = -2, adj = 1,

labels = change.no$country)

segments(x0 = 0, y0 = 1:nrow(change.no),

x1 = change.no[, 2], y1 = 1:nrow(change.no))

# test hypothesis that probability of species decrease is

# equal to probability of increase:

binom.test(sum(change.no < 0), sum(change.no != 0))

stitch("http://dl.dropbox.com/s/ga0qbk1o17n17jj/Change_threatened_species.R")..this is one line of code - can you dig it?..BTW, for simplicity I use knitr::stitch with its default template...

You should get something like THIS PDF.

EDIT, MARCH 2013

OUTDATED! you can use this approach instead:

library(knitr); library(RCurl); library(WDI)

destfile = "script.txt"

x = getBinaryURL("https://dl.dropbox.com/s/ga0qbk1o17n17jj/Change_threatened_species.R", followlocation = TRUE, ssl.verifypeer = FALSE)

writeBin(x, destfile, useBytes = TRUE)

source(paste(tempdir(), "/script.txt", sep = ""))

stitch(paste(tempdir(), "/script.txt", sep = ""))

Playing with knitr: Create Report with Dynamic List

Here is a little toy example using knitr, LaTeX/MiKTeX and Google Docs.

Say you had a list on Google Docs (say a list of attendants) and you want to print a report with it..

Then see this example using this Rnw-file and the output...

make the tex-file with:

or with this shortcut:

Say you had a list on Google Docs (say a list of attendants) and you want to print a report with it..

Then see this example using this Rnw-file and the output...

make the tex-file with:

library(knitr)

knit("knitr_list_of_attendants.Rnw")

or with this shortcut:

knit2pdf("knitr_list_of_attendants.Rnw")

browseURL("knitr_list_of_attendants.pdf")

1 May 2012

Quick Tip: Replace Values in Dataframe on Condition with Random Numbers

This one took me some time - though, in fact it is plain simple:

> options(scipen=999)

> (my_df <- data.frame(matrix(sample(c(0,1), 100, replace = T), 10, 10)))

X1 X2 X3 X4 X5 X6 X7 X8 X9 X10

1 0 0 1 0 1 1 1 1 0 1

2 0 0 1 1 1 0 0 0 0 0

3 0 1 1 0 0 1 1 0 0 1

4 1 1 0 0 0 0 0 0 0 0

5 0 0 1 1 1 0 0 1 1 1

6 0 0 1 1 0 0 1 1 0 1

7 0 0 1 1 1 0 1 1 1 1

8 1 1 1 0 0 0 0 1 1 0

9 0 0 0 0 1 0 1 0 1 0

10 1 1 1 1 1 0 1 1 0 1

> my_df[my_df == 0] <- runif(sum(my_df==0), 0, 0.001)

> my_df

X1 X2 X3 X4 X5 X6 X7 X8

1 0.000268 0.000926 1.000000 2.00e-05 1.000000 1.00e+00 1.00e+00 1.00e+00

2 0.000531 0.000882 1.000000 1.00e+00 1.000000 4.66e-04 3.96e-04 6.70e-04

3 0.000785 1.000000 1.000000 5.03e-04 0.000164 1.00e+00 1.00e+00 2.98e-04

4 1.000000 1.000000 0.000336 8.71e-04 0.000770 7.44e-05 6.49e-05 1.01e-04

5 0.000168 0.000674 1.000000 1.00e+00 1.000000 6.49e-04 2.26e-04 1.00e+00

6 0.000404 0.000950 1.000000 1.00e+00 0.000735 7.59e-04 1.00e+00 1.00e+00

7 0.000472 0.000516 1.000000 1.00e+00 1.000000 1.37e-04 1.00e+00 1.00e+00

8 1.000000 1.000000 1.000000 6.30e-06 0.000972 3.97e-04 5.46e-05 1.00e+00

9 0.000868 0.000577 0.000347 7.21e-05 1.000000 2.25e-04 1.00e+00 7.19e-05

10 1.000000 1.000000 1.000000 1.00e+00 1.000000 5.80e-05 1.00e+00 1.00e+00

X9 X10

1 0.000880 1.00e+00

2 0.000754 7.99e-04

3 0.000817 1.00e+00

4 0.000982 7.85e-04

5 1.000000 1.00e+00

6 0.000104 1.00e+00

7 1.000000 1.00e+00

8 1.000000 9.43e-06

9 1.000000 7.79e-04

10 0.000099 1.00e+00

25 Apr 2012

Reproducible Research: Running odfWeave with 7-zip

odfWeave is an R-package that is used for making dynamic reports by Sweave processing of Open Document Format (ODF) files. For anyone new to report generation and lacking knowledge of markup languages this might be a good starting point or even a true alternative to sweave / LATEX and others.

Now, anyone who recently tried to install the required zipping program for odfWeave might have noticed that there are currently no info-zip executables available (zip and unzip by info-zip software are suggested in the odfWeave manual). There are several other free zipping programs - but if you use these the default syntax for odfWeave changes. Looking into the internals it is revealed that the OS command specified for running the zipping program has to be adapted. There are some postings on the R-help mailing list concerning these topic, but none of them worked for me. After some trial and error I managed to get around this problem by using 7-zip with an adapted syntax and will share this here:

Now, anyone who recently tried to install the required zipping program for odfWeave might have noticed that there are currently no info-zip executables available (zip and unzip by info-zip software are suggested in the odfWeave manual). There are several other free zipping programs - but if you use these the default syntax for odfWeave changes. Looking into the internals it is revealed that the OS command specified for running the zipping program has to be adapted. There are some postings on the R-help mailing list concerning these topic, but none of them worked for me. After some trial and error I managed to get around this problem by using 7-zip with an adapted syntax and will share this here:

# write an in-file and save it to a folder:

dir()

[1] "Example_1_in.odt"

# testing the :

system("\"C:\\Program Files\\7-Zip\\7z.exe\" t -tzip Example_1_in.odt")

7-Zip 9.20 Copyright (c) 1999-2010 Igor Pavlov 2010-11-18

Processing archive: Example_1_in.odt

Testing mimetype

Testing Configurations2\statusbar

Testing Configurations2\accelerator\current.xml

Testing Configurations2\floater

Testing Configurations2\popupmenu

Testing Configurations2\progressbar

Testing Configurations2\menubar

Testing Configurations2\toolbar

Testing Configurations2\images\Bitmaps

Testing content.xml

Testing manifest.rdf

Testing styles.xml

Testing meta.xml

Testing Thumbnails\thumbnail.png

Testing settings.xml

Testing META-INF\manifest.xml

Everything is Ok

Folders: 7

Files: 9

Size: 30139

Compressed: 9572

# setting up cmd prompt:

library(odfWeave)

ctrl <- odfWeaveControl(zipCmd =

c("\"C:\\Program Files\\7-Zip\\7z.exe\" a $$file$$",

"\"C:\\Program Files\\7-Zip\\7z.exe\" x -tzip $$file$$"))

# running:

odfWeave("Example_1_in.odt", "Example_1_out.odt", control = ctrl)

Copying Example_1_in.odt

Setting wd to C:\Users\Kay\AppData\Local\Temp\RtmpghYef5/odfWeave2223195249

Unzipping ODF file using "C:\Program Files\7-Zip\7z.exe" x -tzip "Example_1_in.odt"

7-Zip 9.20 Copyright (c) 1999-2010 Igor Pavlov 2010-11-18

Processing archive: Example_1_in.odt

Extracting mimetype

Extracting Configurations2\statusbar

Extracting Configurations2\accelerator\current.xml

Extracting Configurations2\floater

Extracting Configurations2\popupmenu

Extracting Configurations2\progressbar

Extracting Configurations2\menubar

Extracting Configurations2\toolbar

Extracting Configurations2\images\Bitmaps

Extracting content.xml

Extracting manifest.rdf

Extracting styles.xml

Extracting meta.xml

Extracting Thumbnails\thumbnail.png

Extracting settings.xml

Extracting META-INF\manifest.xml

Everything is Ok

Folders: 7

Files: 9

Size: 30139

Compressed: 9572

Removing Example_1_in.odt

Creating a Pictures directory

Pre-processing the contents

Sweaving content.Rnw

Writing to file content_1.xml

Processing code chunks ...

'content_1.xml' has been Sweaved

Removing content.xml

Post-processing the contents

Removing content.Rnw

Removing styles.xml

Renaming styles_2.xml to styles.xml

Removing manifest.xml

Renaming manifest_2.xml to manifest.xml

Removing extra files

Packaging file using "C:\Program Files\7-Zip\7z.exe" a "Example_1_in.odt"

7-Zip 9.20 Copyright (c) 1999-2010 Igor Pavlov 2010-11-18

Scanning

Creating archive Example_1_in.odt

Compressing Configurations2\accelerator\current.xml

Compressing content.xml

Compressing manifest.rdf

Compressing META-INF\manifest.xml

Compressing meta.xml

Compressing mimetype

Compressing settings.xml

Compressing styles.xml

Compressing Thumbnails\thumbnail.png

Everything is Ok

Copying Example_1_in.odt

Resetting wd

Removing C:\Users\Kay\AppData\Local\Temp\RtmpghYef5/odfWeave2223195249

Done

# see the result:

dir()

[1] "Example_1_in.odt" "Example_1_out.odt"

6 Apr 2012

R-Bloggers' Web-Presence

We love them, we hate them: RANKINGS!

Rankings are an inevitable tool to keep the human rat race going. In this regard I'll pick up my last two posts (HERE & HERE) and have some fun with it by using it to analyse R-Bloggers' web presence. I will use number of hits in Google Search as an indicator.

I searched for URLs like this: https://www.google.com/search?q="http://www.twotorials.com" - meaning that only the exact blog-URL is searched.

When checking the results manually I discovered slight deviations in the numbers and admittedly have no clue why this is.. Sorry if any blog is under- overrepresented due to such an error - please report!

Here is the R-script:

Rankings are an inevitable tool to keep the human rat race going. In this regard I'll pick up my last two posts (HERE & HERE) and have some fun with it by using it to analyse R-Bloggers' web presence. I will use number of hits in Google Search as an indicator.

I searched for URLs like this: https://www.google.com/search?q="http://www.twotorials.com" - meaning that only the exact blog-URL is searched.

| Blogs | NoHits |

|---|---|

| http://google-opensource.blogspot.com | 82300 |

| http://www.programmingr.com | 73500 |

| http://googleresearch.blogspot.com | 58000 |

| http://dirk.eddelbuettel.com | 53000 |

| http://borasky-research.net | 33100 |

| http://casoilresource.lawr.ucdavis.edu | 32500 |

| http://andrewgelman.com | 30000 |

| http://yihui.name | 29600 |

| http://xianblog.wordpress.com | 27900 |

| http://nsaunders.wordpress.com | 27600 |

| http://chem-bla-ics.blogspot.com | 26600 |

| http://plindenbaum.blogspot.com | 24600 |

| http://blog.ouseful.info | 24300 |

| http://www.vcasmo.com | 24200 |

| http://yz.mit.edu | 23500 |

| http://romainfrancois.blog.free.fr | 22700 |

| http://blog.revolutionanalytics.com | 21000 |

| http://robjhyndman.com | 18400 |

| http://freakonometrics.blog.free.fr | 16100 |

| http://perfdynamics.blogspot.com | 15400 |

| http://www.stubbornmule.net | 14800 |

| http://zoonek.free.fr | 14800 |

| http://jackman.stanford.edu | 13900 |

| http://www.bytemining.com | 13700 |

| http://learnr.wordpress.com | 12600 |

| http://tommy.chheng.com | 12200 |

| http://mazamascience.com | 12000 |

| http://www.investuotojas.eu | 11500 |

| http://www.r-statistics.com | 11300 |

| http://www.franklincenterhq.org | 10800 |

| http://gettinggeneticsdone.blogspot.com | 10700 |

| http://mpastell.com | 9930 |

| http://pineda-krch.com | 9780 |

| http://blog.saush.com | 9220 |

| http://www.premiersoccerstats.com | 8950 |

| http://developmentality.wordpress.com | 7250 |

| http://www.dataspora.com | 7200 |

| http://blog.hiremebecauseimsmart.com | 7050 |

| http://isomorphismes.tumblr.com | 7040 |

| http://www.mathfinance.cn | 6930 |

| http://blog.nguyenvq.com | 6150 |

| http://www.drewconway.com | 5970 |

| http://www.carlboettiger.info | 5520 |

| http://www.statisticsblog.com | 5110 |

| http://www.decisionsciencenews.com | 4950 |

| http://www.r-chart.com | 4810 |

| http://chartsgraphs.wordpress.com | 4480 |

| http://www.portfolioprobe.com | 4410 |

| http://procomun.wordpress.com | 4330 |

| http://jeromyanglim.blogspot.com | 4080 |

| http://spatialanalysis.co.uk | 4080 |

| http://www.theresearchkitchen.com | 4080 |

| http://www.forex-bloggers.com | 4070 |

| https://www.rmetrics.org | 4050 |

| http://princeofslides.blogspot.com | 3900 |

| http://www.cybaea.net | 3740 |

| http://www.cerebralmastication.com | 3710 |

| http://ygc.name | 3670 |

| http://ryouready.wordpress.com | 3450 |

| http://jeffreybreen.wordpress.com | 3410 |

| http://systematicinvestor.wordpress.com | 3400 |

| http://sgsong.blogspot.com | 3310 |

| http://industrialengineertools.blogspot.com | 3290 |

| http://www.r-tutor.com | 3270 |

| http://fishlab.ucdavis.edu | 3270 |

| http://ggorjan.blogspot.com | 3250 |

| http://blog.ynada.com | 3220 |

| http://farmacokratia.blogspot.com | 3170 |

| http://4dpiecharts.com | 3130 |

| http://heuristically.wordpress.com | 3040 |

| http://blog.rtwilson.com | 2910 |

| http://www.wekaleamstudios.co.uk | 2890 |

| http://www.dataists.com | 2840 |

| http://ikanb.wordpress.com | 2750 |

| http://shape-of-code.coding-guidelines.com | 2730 |

| http://onertipaday.blogspot.com | 2710 |

| http://blog.fosstrading.com | 2700 |

| http://blog.echen.me | 2690 |

| http://www.theusrus.de | 2670 |

| http://cloudnumbers.com | 2630 |

| http://paulbutler.org | 2620 |

| http://biostatmatt.com | 2460 |

| http://www.johnmyleswhite.com | 2430 |

| http://dataninja.wordpress.com | 2360 |

| http://realizationsinbiostatistics.blogspot.com | 2340 |

| http://statisfaction.wordpress.com | 2300 |

| http://uxblog.idvsolutions.com | 2250 |

| http://timelyportfolio.blogspot.com | 2210 |

| http://radfordneal.wordpress.com | 2200 |

| http://sas-and-r.blogspot.com | 2200 |

| http://pairach.com | 2110 |

| http://yusung.blogspot.com | 2050 |

| http://blog.flacso.edu.mx | 2010 |

| http://www.rensenieuwenhuis.nl | 2000 |

| http://michaeldhealy.com | 1990 |

| http://freigeist.devmag.net | 1950 |

| http://www.fernandohrosa.com.br | 1920 |

| http://statbandit.wordpress.com | 1870 |

| http://www.win-vector.com | 1840 |

| http://lukemiller.org | 1830 |

| http://ropensci.org | 1720 |

| http://www.eggwall.com | 1650 |

| http://benmazzotta.wordpress.com | 1620 |

| http://bms.zeugner.eu | 1610 |

| http://cartesianfaith.wordpress.com | 1580 |

| http://linkedscience.org | 1570 |

| http://stevemosher.wordpress.com | 1550 |

| http://intelligenttradingtech.blogspot.com | 1520 |

| http://www.imachordata.com | 1480 |

| http://blog.diegovalle.net | 1470 |

| http://jermdemo.blogspot.com | 1430 |

| http://nortalktoowise.com | 1420 |

| http://ekonometrics.blogspot.com | 1340 |

| http://digitheadslabnotebook.blogspot.com | 1320 |

| http://flyordie.sin.khk.be | 1310 |

| http://schamberlain.github.com | 1230 |

| http://gribblelab.org | 1180 |

| http://www.quantf.com | 1130 |

| http://offensivepolitics.net | 1020 |

| http://www.markmfredrickson.com | 981 |

| http://blog.mckuhn.de | 948 |

| http://erehweb.wordpress.com | 889 |

| http://confounding.net | 886 |

| http://simplystatistics.tumblr.com | 875 |

| http://www.babelgraph.org | 859 |

| http://csgillespie.wordpress.com | 857 |

| http://joewheatley.net | 844 |

| http://helmingstay.blogspot.com | 843 |

| http://theaverageinvestor.wordpress.com | 825 |

| http://quantitative-ecology.blogspot.com | 785 |

| http://zvfak.blogspot.com | 776 |

| http://ucfagls.wordpress.com | 766 |

| http://opendatagroup.com | 760 |

| http://cameron.bracken.bz | 740 |

| http://rtutorialseries.blogspot.com | 738 |

| http://opencpu.org | 708 |

| http://novicemetrics.blogspot.com | 700 |

| http://lamages.blogspot.com | 680 |

| http://nir-quimiometria.blogspot.com | 679 |

| http://tonybreyal.wordpress.com | 677 |

| http://brokeringclosure.wordpress.com | 658 |

| http://socialdatablog.com | 643 |

| http://dancingeconomist.blogspot.com | 629 |

| http://www.rtexttools.com | 603 |

| http://danganothererror.wordpress.com | 589 |

| http://thebiobucket.blogspot.com | 567 |

| http://holtmeier.de | 531 |

| http://val-systems.blogspot.com | 519 |

| http://thelogcabin.wordpress.com | 489 |

| http://dcemri.blogspot.com | 484 |

| http://rdatamining.wordpress.com | 477 |

| http://bridgewater.wordpress.com | 460 |

| http://www.rcasts.com | 444 |

| http://dsparks.wordpress.com | 436 |

| http://pr.cloudst.at | 422 |

| http://polstat.org | 409 |

| http://www.compmath.com | 401 |

| http://techno-realism.blogspot.com | 399 |

| http://www.backsidesmack.com | 395 |

| http://geotheory.org | 393 |

| http://miraisolutions.wordpress.com | 367 |

| http://econometricsense.blogspot.com | 352 |

| http://blog.binfalse.de | 344 |

| http://rforcancer.drupalgardens.com | 317 |

| http://blog.rstudio.org | 316 |

| http://mcfromnz.wordpress.com | 309 |

| http://www.quantumforest.com | 309 |

| http://blog.quanttrader.org | 303 |

| http://chrisladroue.com | 293 |

| http://www.michaelbommarito.com | 289 |

| http://procrun.com | 280 |

| http://mikeksmith.posterous.com | 279 |

| http://bio7.org | 278 |

| http://kbroman.wordpress.com | 278 |

| http://martynplummer.wordpress.com | 272 |

| http://bryer.org | 268 |

| http://www.funjackals.com | 265 |

| http://www.harlan.harris.name | 252 |

| http://www.milktrader.net | 248 |

| http://www.surefoss.org | 241 |

| http://rigorousanalytics.blogspot.com | 231 |

| http://www.jameskeirstead.ca | 229 |

| http://programming-r-pro-bro.blogspot.com | 225 |

| http://plausibel.blogspot.com | 224 |

| http://statistic-on-air.blogspot.com | 217 |

| http://mintgene.wordpress.com | 212 |

| http://moderntoolmaking.blogspot.com | 205 |

| http://quantitativeecology.blogspot.com | 199 |

| http://www.sigmafield.org | 199 |

| http://www.ancienteco.com | 194 |

| http://worldofrcraft.blogspot.com | 191 |

| http://rappster.wordpress.com | 190 |

| http://stotastic.com | 189 |

| http://evolvingspaces.blogspot.com | 184 |

| http://strugglingthroughproblems.blogspot.com | 166 |

| http://sharpstatistics.co.uk | 161 |

| http://leftcensored.skepsi.net | 160 |

| http://omegahat.wordpress.com | 156 |

| http://drunks-and-lampposts.com | 155 |

| http://amathew.com | 152 |

| http://onlinelabor.blogspot.com | 147 |

| http://johnramey.net | 144 |

| http://gossetsstudent.wordpress.com | 138 |

| http://tomhopper.wordpress.com | 135 |

| http://ggobi.blogspot.com | 134 |

| http://blog.fellstat.com | 131 |

| http://www.openanalytics.eu | 130 |

| http://www.numbertheory.nl | 127 |

| http://stats.blogoverflow.com | 127 |

| http://the-praise-of-insects.blogspot.com | 122 |

| http://lpenz.github.com | 118 |

| http://christophergandrud.blogspot.com | 118 |

| http://f.giorlando.org | 112 |

| http://bayesianbiologist.com | 110 |

| http://www.graphoftheweek.org | 109 |

| http://oneliner.soma20.com | 109 |

| http://inundata.org | 107 |

| http://geokook.wordpress.com | 104 |

| http://blog.datapunks.com | 102 |

| http://eranraviv.com | 102 |

| http://eranraviv.com | 102 |

| http://www.compbiome.com | 101 |

| http://www.techpolicy.ca | 99 |

| http://www.psychwire.co.uk | 97 |

| http://blog.carlislerainey.com | 93 |

| http://vasishth-statistics.blogspot.com | 93 |

| http://www.statsravingmad.com | 93 |

| http://using-r-project.blogspot.com | 93 |

| http://www.nikhilgopal.com | 92 |

| http://thedatamonkey.blogspot.com | 92 |

| http://jeffreyhorner.tumblr.com | 90 |

| http://menugget.blogspot.com | 88 |

| http://www.twotorials.com | 88 |

| http://dataexcursions.wordpress.com | 84 |

| http://viksalgorithms.blogspot.com | 83 |

| http://exploringdatablog.blogspot.com | 81 |

| http://sachaepskamp.com | 81 |

| http://aphysicistinwallstreet.blogspot.com | 77 |

| http://lastresortsoftware.blogspot.com | 75 |

| http://www.nomad.priv.at | 72 |

| http://applyr.blogspot.com | 71 |

| http://www.knowledgediscovery.jp | 71 |

| http://weitaiyun.blogspot.com | 71 |

| http://xmphforex.wordpress.com | 71 |

| http://statsadventure.blogspot.com | 70 |

| http://davenportspatialanalytics.squarespace.com | 70 |

| http://anandram.wordpress.com | 69 |

| http://rpint.wordpress.com | 68 |

| http://datadebrief.blogspot.com | 66 |

| http://blog.cloudstat.org | 64 |

| http://www.r-podcast.org | 64 |

| http://rmkrug.wordpress.com | 62 |

| http://denishaine.wordpress.com | 61 |

| http://expansed.com | 58 |

| http://r.andrewredd.us | 57 |

| http://isseing333.blogspot.com | 57 |

| http://solomonmessing.wordpress.com | 57 |

| http://rtricks.wordpress.com | 57 |

| http://anrprogrammer.wordpress.com | 56 |

| http://arungaikwad.wordpress.com | 56 |

| http://geolabs.wordpress.com | 55 |

| http://lookingatdata.blogspot.com | 55 |

| http://factbased.blogspot.com | 54 |

| http://severity.blogspot.com | 54 |

| http://swordofcrom.wordpress.com | 53 |

| http://librestats.wordpress.com | 51 |

| http://marcinkula.wordpress.com | 51 |

| http://gsoc2010r.wordpress.com | 47 |

| http://psyccomputing.blogspot.com | 46 |

| http://fabiomarroni.wordpress.com | 45 |

| http://jedifran.com | 45 |

| http://alstatr.blogspot.com | 43 |

| http://r-video-tutorial.blogspot.com | 42 |

| http://alexfarquhar.posterous.com | 40 |

| http://bmb-common.blogspot.com | 40 |

| http://rdataviz.wordpress.com | 40 |

| http://mypapertrades.blogspot.com | 38 |

| http://pitchrx.blogspot.com | 38 |

| http://simonmueller.net | 38 |

| http://statisfactions.wordpress.com | 37 |

| http://nzprimarysectortrade.wordpress.com | 36 |

| http://seanmulcahy.blogspot.com | 36 |

| http://www.speakingstatistically.com | 35 |

| http://joshpaulson.wordpress.com | 34 |

| http://learningrbasic.blogspot.com | 34 |

| http://mockquant.blogspot.com | 33 |

| http://costaleconomist.blogspot.com | 32 |

| http://rsnippets.blogspot.com | 31 |

| http://statmethods.wordpress.com | 29 |

| http://aviadklein.wordpress.com | 28 |

| http://obeautifulcode.com | 28 |

| http://blog.cloudst.at | 24 |

| http://rstats.posterous.com | 23 |

| http://notebookonthewebs.tumblr.com | 22 |

| http://0utlier.blogspot.com | 21 |

| http://gjkerns.github.com | 21 |

| http://eigensomething.blogspot.com | 10 |

| http://brocktibert.wordpress.com | 9 |

| http://toddjobe.blogspot.com | 9 |

| http://mickeymousemodels.blogspot.com | 9 |

| http://forgetfulfunctor.blogspot.com | 9 |

| http://rocknrblog.wordpress.com | 9 |

| http://dmbates.blogspot.com | 8 |

| http://blog.nextbiomotif.com | 8 |

| http://indiacrunchin.wordpress.com | 8 |

| http://blog.trenthauck.com | 8 |

| http://mikescnc.blogspot.com | 8 |

| http://jeroldhaas.blogspot.com | 8 |

| http://tlevine.tumblr.com | 8 |

| http://empty-moon-9726.heroku.com | 8 |

| http://www.proc-x.com | 7 |

| http://jointposterior.blogspot.com | 7 |

| http://gastonsanchez.wordpress.com | 7 |

| http://mlt-thinks.blogspot.com | 7 |

| http://rstats.wordpress.com | 7 |

| http://playingwithr.blogspot.com | 7 |

| http://scottmutchler.blogspot.com | 6 |

| http://iamdata.wordpress.com | 6 |

| http://sfchaos.blogspot.com | 6 |

| http://nightlordtw.wordpress.com | 5 |

| http://pleasepasstheroc.blogspot.com | 5 |

| http://wiekvoet.blogspot.com | 5 |

| http://d7.stattler.com | 4 |

| http://yetanotherrblog.blogspot.com | 4 |

| http://blog.iwanluijks.nl:80 | 3 |

| https://rlearner.wordpress.com | 3 |

| http://margintale.blogspot.com | 1 |

When checking the results manually I discovered slight deviations in the numbers and admittedly have no clue why this is.. Sorry if any blog is under- overrepresented due to such an error - please report!

Here is the R-script:

require(XML)

library(stringr)

library(RCurl)

library(xtable)

GoogleHits.1 <- function(input)

{

url <- paste("https://www.google.com/search?q=\"",

input, "\"", sep = "")

CAINFO = paste(system.file(package="RCurl"), "/CurlSSL/ca-bundle.crt", sep = "")

script <- getURL(url, followlocation = TRUE, cainfo = CAINFO)

doc <- htmlParse(script)

res <- xpathSApply(doc, "//div[@id='subform_ctrl']/*", xmlValue)[[2]]

return(as.integer(gsub("[^0-9]", "", res)))

}

# Example:

GoogleHits.1("R%Statistical%Software")

###################### Begin get r-blogger's URLs: ###########################################

# get blogger urls with XML:

script <- getURL("www.r-bloggers.com")

doc <- htmlParse(script)

li <- getNodeSet(doc, "//ul[@class='xoxo blogroll']//a")

urls <- sapply(li, xmlGetAttr, "href")

# extract sensible blog urls:

# get ids for those with only 2 slashes (no 3rd in the end):

id <- which(nchar(gsub("[^/]", "", urls )) == 2)

slash_2 <- urls[id]

# find position of 3rd slash occurrence in strings:

slash_stop <- unlist(lapply(str_locate_all(urls, "/"),"[[", 3))

slash_3 <- substring(urls, first = 1, last = slash_stop - 1)

# replace the ones with 2 slashes:

blogs <- slash_3; blogs[id] <- slash_2

# dismiss:

blogs <- blogs[blogs != "http://domain"]

###################### End get r-blogger's URLs: #############################

###################### Begin Google Search: ##################################

# with lapply google mocks about roboting the site..

# I'm blocked on the 300th recursion..

# unlist(lapply(blogs, GoogleHits.1))

# try splitting, doesn't work (blocked the same as before)

res1 <- unlist(lapply(blogs[1:170], GoogleHits.1))

res2 <- unlist(lapply(blogs[171:334], GoogleHits.1))

# try to do it in 2 sessions (saving first result), or manually re-connnect host before second try:

df1 <- data.frame(Blogs = blogs[1:170], NoHits = res1, row.names = NULL)

save(df1, file = "df1.R")

load("df1.RData"); unlink("df1.RData")

# second run:

df2 <- data.frame(Blogs = blogs[171:334], NoHits = res2, row.names = NULL)

# bind dfs, sort by NoHits: